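Introduction to RKE

- Rancher Kubernetes Engine (RKE) is a CNCF-certified Kubernetes installer. RKE supports several operating systems, including macOS, Linux, and Windows, and runs on both bare-metal and virtual servers.

- Other Kubernetes deployment tools share a common problem: they impose many prerequisites, such as installing kubelet and configuring networking, before the tool can be used. RKE reduces cluster deployment to a single prerequisite: as long as the Docker version you use is one that RKE supports, you can use RKE to install, deploy, and run a Kubernetes cluster.

- RKE can be used on its own as a tool for creating Kubernetes clusters, or together with Rancher 2.x as a Rancher component that deploys and runs Kubernetes clusters from within Rancher.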

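Cluster deployment overview

- This tutorial uses standalone RKE (v1.3.24) to deploy the Rancher-packaged Kubernetes release 1.23.16-rancher2-3. The system initialization script targets the official, unmodified Rocky Linux 8.10 image.

- The K8S cluster runs on two virtual machines: a master node (192.168.2.71/24) and a worker node (192.168.2.72/24). RKE itself runs on the master node, and one more machine (192.168.2.77/24) hosts the Rancher application.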

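System requirements

- RKE runs on most Linux operating systems that have Docker installed. The SSH user that RKE uses to reach each node must be a member of the docker group on that node.

- Disable swap on all nodes. On a freshly installed operating system, the simplest approach is not to create a swap partition at all.

- Check the documentation for your network plugin for any additional requirements (for example, kernel modules).

- Adjust sysctl settings and load the required kernel modules. The core settings are listed below; for the full kernel tuning, see the initialization script in this document.

# Set the required sysctl parameters; they persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
# Enable IP forwarding
net.ipv4.ip_forward = 1
EOF

cat <<EOF | sudo tee /etc/modules-load.d/modules.conf
# br_netfilter lets Netfilter filter and NAT traffic that crosses a bridge
br_netfilter
# overlay provides the overlay filesystem used by container storage
overlay
EOF

- This tutorial is based on Rocky Linux 8.10 with the kernel updated to the latest LTS release.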
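As a rough way to confirm the swap and sysctl requirements on a node, the sketch below defines two helper functions. The function names are hypothetical, and reading /proc/swaps and the k8s.conf file directly is an assumption made for illustration; none of this is part of RKE.

```shell
#!/bin/sh
# Pre-flight helpers for an RKE node (illustrative sketch, not part of RKE).

# swap_is_off [FILE]: succeeds when the swaps table lists no active device.
# FILE defaults to /proc/swaps, whose first line is a header.
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    local active
    # Count non-empty lines after the header line.
    active=$(tail -n +2 "$swaps_file" | grep -c .)
    [ "$active" -eq 0 ]
}

# sysctl_conf_enables FILE KEY: succeeds when FILE sets KEY to 1.
sysctl_conf_enables() {
    local conf_file="$1" key="$2"
    # Strip spaces and tabs, then look for an exact "key=1" line.
    tr -d ' \t' < "$conf_file" | grep -qxF "${key}=1"
}

# Example usage on a node:
#   swap_is_off || echo "swap is still enabled"
#   sysctl_conf_enables /etc/sysctl.d/k8s.conf net.ipv4.ip_forward \
#       || echo "net.ipv4.ip_forward is not set to 1"
```

Both helpers only read files, so they can be run by an unprivileged user before RKE is invoked.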

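Software requirements

- See the RKE release notes for the Kubernetes versions that each RKE release supports.

- Each Kubernetes version supports a different set of Docker versions; see the Kubernetes release notes for details.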

System initialization script

#!/bin/bash

#*************************************************************************************************************

#Author: kubecc

#Date: 2024-09-08

#FileName: rocky8_init_k8s.sh

#blog: www.kubecc.com

#Description: Initializes the system before a k8s deployment; targets Rocky Linux 8.10

#Copyright (C): 2024 All rights reserved

#*************************************************************************************************************

COLOR="echo -e \\033[01;31m"

END='\033[0m'

set_mirror() {

    echo "Configuring the Aliyun package mirror..."
    sed -e 's|^mirrorlist=|#mirrorlist=|g' \
        -e 's|^#baseurl=http://dl.rockylinux.org/$contentdir|baseurl=https://mirrors.aliyun.com/rockylinux|g' \
        -i.bak \
        /etc/yum.repos.d/Rocky-*.repo
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org && dnf install -y https://www.elrepo.org/elrepo-release-8.el8.elrepo.noarch.rpm
    sed -i 's/mirrorlist=/#mirrorlist=/g' /etc/yum.repos.d/elrepo.repo && sed -i 's#elrepo.org/linux#mirrors.tuna.tsinghua.edu.cn/elrepo#g' /etc/yum.repos.d/elrepo.repo
    dnf config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    dnf clean all && dnf makecache
    echo "Mirrors configured and package cache refreshed."

}

install_software() {

    echo "Installing common tools and services..."
    dnf install telnet lsof vim git unzip wget curl tcpdump bash-completion net-tools epel-release rsyslog dnsutils chrony ipvsadm ipset sysstat conntrack libseccomp perl -y
    echo "Software installation complete."

}

update_kernel() {

    echo "Updating the kernel..."
    # Quote the glob so the shell cannot expand it against local file names
    dnf --disablerepo=\* --enablerepo=elrepo-kernel list 'kernel*' | grep kernel-lt
    sleep 10
    dnf --disablerepo=\* --enablerepo=elrepo-kernel install -y kernel-lt.x86_64 kernel-lt-devel.x86_64
    echo "Current default boot kernel: $(grubby --default-kernel)"

}

datetime_chrony() {

    echo "Configuring time synchronization..."
    sed -i '/^pool 2.rocky.pool.ntp.org iburst/d' /etc/chrony.conf
    echo "server ntp.aliyun.com iburst" >>/etc/chrony.conf
    echo "server ntp.tuna.tsinghua.edu.cn iburst" >>/etc/chrony.conf
    systemctl enable --now chronyd
    chronyc -a makestep
    echo "Current system time: $(date)"

}

disable_firewall_selinux() {

    echo "Stopping and disabling the firewall..."
    systemctl disable --now firewalld
    echo "Firewall state: $(firewall-cmd --state 2>&1)"
    echo "Setting SELinux to disabled..."
    # Anchor the match so only the SELINUX= setting is rewritten, not the comment lines
    sed -ri 's#^(SELINUX=).*#\1disabled#' /etc/selinux/config && setenforce 0
    echo "Current SELinux mode: $(getenforce)"

}

kernel_system_config() {

    echo "Tuning resource limits in /etc/security/limits.conf..."
    echo -e "* soft nofile 65536\n* hard nofile 131072\n* soft nproc 65535\n* hard nproc 655350\n* soft memlock unlimited\n* hard memlock unlimited" >>/etc/security/limits.conf
    echo "Verifying the result..."
    grep -v "#" /etc/security/limits.conf

cat <<EOF | tee /etc/modules-load.d/modules.conf

overlay

br_netfilter

EOF

cat <<EOF | tee /etc/modules-load.d/ipvs.conf

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp


nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

systemctl enable --now systemd-modules-load.service

}

add_k8s_conf() {

cat <<EOF >/etc/sysctl.d/k8s.conf
# 1. File system parameters
fs.may_detach_mounts = 1
fs.inotify.max_user_watches = 89100
fs.file-max = 52706963
fs.nr_open = 52706963
# 2. Memory parameters
vm.panic_on_oom = 0
vm.overcommit_memory = 1
# 3. Network parameters
net.ipv4.conf.all.route_localnet = 1
net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.tcp_keepalive_time = 600
net.ipv4.tcp_keepalive_probes = 3
net.ipv4.tcp_keepalive_intvl = 15
net.ipv4.tcp_max_tw_buckets = 36000
net.ipv4.tcp_tw_reuse = 1
net.ipv4.tcp_max_orphans = 327680
net.ipv4.tcp_orphan_retries = 3
net.ipv4.tcp_syncookies = 1
net.ipv4.tcp_max_syn_backlog = 16384
net.ipv4.tcp_timestamps = 0
net.core.somaxconn = 16384
net.netfilter.nf_conntrack_max = 2310720
EOF
sysctl --system

}

execute_all() {

set_mirror

install_software

update_kernel

datetime_chrony

disable_firewall_selinux

kernel_system_config

add_k8s_conf

}

restart_os(){

    echo "Rebooting the server; reconnect in a few moments..."

shutdown -r now

}

menu() {

while true; do

echo -e "\E[$((RANDOM % 7 + 31));1m"

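cat <<-EOF
********************************************************************
* k8s initialization script menu
* 1. Configure package mirrors
* 2. Install common software
* 3. Update the kernel
* 4. Synchronize time with chrony
* 5. Disable the firewall and SELinux
* 6. Apply kernel tuning
* 7. Add the k8s sysctl configuration
* 8. Run all steps
* 9. Reboot the server
* 10. Exit the script
********************************************************************
EOF
echo -e '\E[0m'
read -p "Select an option (1-10): " choice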

case ${choice} in

1)

set_mirror

;;

2)

install_software

;;

3)

update_kernel

;;

4)

datetime_chrony

;;

5)

disable_firewall_selinux

;;

6)

kernel_system_config

;;

7)

add_k8s_conf

;;

8)

execute_all

;;

9)

restart_os

;;

10)

break

;;

*)

${COLOR}"Invalid input: enter a number from 1 to 10."${END}

;;

esac

done

}

main() {

menu

}

main
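After the reboot it can be useful to confirm that the modules listed under /etc/modules-load.d were actually loaded. The helper below is a minimal sketch with a hypothetical name; it compares a module list against the first column of /proc/modules. Modules compiled into the kernel do not appear in /proc/modules, so a reported name is a hint to investigate rather than proof of a failure.

```shell
#!/bin/sh
# missing_modules LIST_FILE [PROC_MODULES]: print every module named in
# LIST_FILE (one per line, modules-load.d style) that is absent from the
# loaded-module table. PROC_MODULES defaults to /proc/modules.
missing_modules() {
    local list_file="$1" proc_modules="${2:-/proc/modules}"
    local module
    while read -r module _ || [ -n "$module" ]; do
        # Skip blank lines and comment lines.
        case "$module" in
            ''|'#'*|';'*) continue ;;
        esac
        # The first column of /proc/modules is the module name.
        if ! awk -v m="$module" '$1 == m { found = 1 } END { exit !found }' "$proc_modules"; then
            echo "$module"
        fi
    done < "$list_file"
}

# Example usage on a node:
#   missing_modules /etc/modules-load.d/ipvs.conf
```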

Install Docker

# Install the Docker version you need; this tutorial installs Docker v20.10.24

[root@localhost ~]# dnf list docker-ce.x86_64 --showduplicates | sort -r

Last metadata expiration check: 3:33:06 ago on Tue 21 Jan 2025 03:56:14 PM CST.

Installed Packages

docker-ce.x86_64 3:26.1.3-1.el8 docker-ce-stable

docker-ce.x86_64 3:26.1.2-1.el8 docker-ce-stable

docker-ce.x86_64 3:26.1.1-1.el8 docker-ce-stable

docker-ce.x86_64 3:26.1.0-1.el8 docker-ce-stable

docker-ce.x86_64 3:26.0.2-1.el8 docker-ce-stable

docker-ce.x86_64 3:26.0.1-1.el8 docker-ce-stable

docker-ce.x86_64 3:26.0.0-1.el8 docker-ce-stable

docker-ce.x86_64 3:25.0.5-1.el8 docker-ce-stable

docker-ce.x86_64 3:25.0.4-1.el8 docker-ce-stable

docker-ce.x86_64 3:25.0.3-1.el8 docker-ce-stable

docker-ce.x86_64 3:25.0.2-1.el8 docker-ce-stable

docker-ce.x86_64 3:25.0.1-1.el8 docker-ce-stable

docker-ce.x86_64 3:25.0.0-1.el8 docker-ce-stable

docker-ce.x86_64 3:24.0.9-1.el8 docker-ce-stable

docker-ce.x86_64 3:24.0.8-1.el8 docker-ce-stable

.................................(output omitted)..........................................

[root@localhost ~]# dnf install docker-ce-20.10.24 docker-ce-cli-20.10.24 containerd.io docker-buildx-plugin docker-compose-plugin

Docker CE Stable - x86_64 320 kB/s | 66 kB 00:00

Dependencies resolved.

===================================================================================================================================================================================

Package Architecture Version Repository Size

===================================================================================================================================================================================

Installing:

containerd.io x86_64 1.6.32-3.1.el8 docker-ce-stable 35 M

docker-buildx-plugin x86_64 0.14.0-1.el8 docker-ce-stable 14 M

docker-ce x86_64 3:20.10.24-3.el8 docker-ce-stable 21 M

docker-ce-cli x86_64 1:20.10.24-3.el8 docker-ce-stable 30 M

docker-compose-plugin x86_64 2.27.0-1.el8 docker-ce-stable 13 M

Installing dependencies:

container-selinux noarch 2:2.229.0-2.module+el8.10.0+1896+b18fa106 appstream 70 k

docker-ce-rootless-extras x86_64 26.1.3-1.el8 docker-ce-stable 5.0 M

fuse-overlayfs x86_64 1.13-1.module+el8.10.0+1896+b18fa106 appstream 69 k

fuse3 x86_64 3.3.0-19.el8 baseos 54 k

fuse3-libs x86_64 3.3.0-19.el8 baseos 95 k

libcgroup x86_64 0.41-19.el8 baseos 69 k

libslirp x86_64 4.4.0-2.module+el8.10.0+1896+b18fa106 appstream 69 k

slirp4netns x86_64 1.2.3-1.module+el8.10.0+1896+b18fa106 appstream 55 k

Enabling module streams:

container-tools rhel8

Transaction Summary

===================================================================================================================================================================================

Install 13 Packages

Total download size: 119 M

Installed size: 471 M

Is this ok [y/N]: y

# Start the Docker service
systemctl enable --now docker && docker version

# Configure registry mirrors
[root@localhost ~]# vim /etc/docker/daemon.json

{

"registry-mirrors": [

"https://hub.littlediary.cn",

"https://docker.1ms.run"

]

}
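For unattended setups, the same daemon.json can be generated without an editor. The function below is a sketch with a hypothetical name; it prints a file of the shape shown above for whatever mirror URLs it is given.

```shell
#!/bin/sh
# render_daemon_json MIRROR...: print a daemon.json that lists the given
# registry mirrors under "registry-mirrors".
render_daemon_json() {
    local i=0 total=$# mirror
    printf '{\n  "registry-mirrors": [\n'
    for mirror in "$@"; do
        i=$((i + 1))
        if [ "$i" -lt "$total" ]; then
            # Every entry except the last is followed by a comma.
            printf '    "%s",\n' "$mirror"
        else
            printf '    "%s"\n' "$mirror"
        fi
    done
    printf '  ]\n}\n'
}

# Example usage (overwrites the existing file):
#   render_daemon_json https://hub.littlediary.cn https://docker.1ms.run \
#       | sudo tee /etc/docker/daemon.json
```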

# Reload the configuration and restart Docker
systemctl daemon-reload && systemctl restart docker.service

Configure hostnames

# 1. Set the hostname
hostnamectl set-hostname m01 && bash # run on the master node
hostnamectl set-hostname n01 && bash # run on the worker node

# 2. Add both hosts to /etc/hosts on each machine
[root@m01 ~]# vim /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.2.71 m01

192.168.2.72 n01

Create the RKE user and configure passwordless SSH login

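# 1. Create the rke user and set its password (needed on both nodes, since step 4 logs in as rke on m01 and n01)
useradd rke && echo 123.com | passwd --stdin rke # replace 123.com with your own password

# 2. Add the rke user to the docker group. If the docker group is missing after installing Docker, create it with groupadd docker
usermod -aG docker rke

# 3. For convenience in later steps, grant the rke user sudo privileges. This step is optional; apply it with caution
echo "rke ALL=(ALL) NOPASSWD: ALL" >> /etc/sudoers # lets rke run any command through sudo without a password
gpasswd -a rke root # add the rke user to the root group
newgrp root # open a new shell with root as the current group; this does not change the primary group of rke

# 4. On m01, switch to the rke user and set up passwordless SSH from m01 to itself and to n01
[root@m01 ~]# su - rke
Last login: Tue Jan 21 16:02:27 CST 2025 on pts/1
[rke@m01 ~]$ ssh-keygen -t rsa -b 4096 # generate a key pair; press Enter at every prompt
[rke@m01 ~]$ ssh-copy-id rke@m01 # enter the rke user's password when prompted
[rke@m01 ~]$ ssh-copy-id rke@n01 # enter the rke user's password when prompted

Deploy RKE (standalone)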

RKE can be obtained in three ways: from GitHub, through Homebrew, or through MacPorts. This tutorial downloads the binary from GitHub.

# Run as root on the m01 node
# 1. Download the rke binary
wget https://github.com/rancher/rke/releases/download/v1.3.24/rke_linux-amd64 -O /usr/local/bin/rke

# 2. Make it executable
chmod +x /usr/local/bin/rke

# 3. Check the version
[root@m01 ~]# rke --version
rke version v1.3.24

Generate the K8S cluster configuration file with RKE

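There are two ways to create the cluster configuration file cluster.yml:

- Start from the minimal cluster.yml and add the details of your nodes to it.

- Run the rke config command, which builds the file from the cluster parameters you enter one at a time.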
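For the first option, a minimal cluster.yml only needs each node's address, SSH user, and roles. The sketch below prints such a file for this tutorial's two nodes; the function name and the "address:role,role" argument format are hypothetical conveniences, and any field left out falls back to RKE's defaults.

```shell
#!/bin/sh
# render_minimal_cluster_yml USER NODE...: print a minimal cluster.yml.
# Each NODE has the form "address:role[,role...]", e.g. "m01:controlplane,etcd".
render_minimal_cluster_yml() {
    local user="$1"
    shift
    local node address roles role old_ifs
    echo "nodes:"
    for node in "$@"; do
        address="${node%%:*}"
        roles="${node#*:}"
        echo "  - address: ${address}"
        echo "    user: ${user}"
        echo "    role:"
        # Split the comma-separated role list into one YAML item per role.
        old_ifs=$IFS
        IFS=','
        for role in $roles; do
            echo "      - ${role}"
        done
        IFS=$old_ifs
    done
}

# Example usage:
#   render_minimal_cluster_yml rke m01:controlplane,etcd n01:worker > cluster.yml
```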

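This tutorial uses rke config --name cluster.yml, which creates cluster.yml in the current directory. The command prompts for every parameter needed to create the cluster; see the cluster configuration options for details. The session looks like this: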

[rke@m01 ~]$ rke config --name cluster.yml

[+] Cluster Level SSH Private Key Path [~/.ssh/id_rsa]:

[+] Number of Hosts [1]: 2

[+] SSH Address of host (1) [none]: m01

[+] SSH Port of host (1) [22]:

[+] SSH Private Key Path of host (m01) [none]:

[-] You have entered empty SSH key path, trying fetch from SSH key parameter

[+] SSH Private Key of host (m01) [none]:

[-] You have entered empty SSH key, defaulting to cluster level SSH key: ~/.ssh/id_rsa

[+] SSH User of host (m01) [ubuntu]: rke

[+] Is host (m01) a Control Plane host (y/n)? [y]: y

[+] Is host (m01) a Worker host (y/n)? [n]: n

[+] Is host (m01) an etcd host (y/n)? [n]: y

[+] Override Hostname of host (m01) [none]:

[+] Internal IP of host (m01) [none]: 192.168.2.71

[+] Docker socket path on host (m01) [/var/run/docker.sock]:

[+] SSH Address of host (2) [none]: n01

[+] SSH Port of host (2) [22]:

[+] SSH Private Key Path of host (n01) [none]:

[-] You have entered empty SSH key path, trying fetch from SSH key parameter

[+] SSH Private Key of host (n01) [none]:

[-] You have entered empty SSH key, defaulting to cluster level SSH key: ~/.ssh/id_rsa

[+] SSH User of host (n01) [ubuntu]: rke

[+] Is host (n01) a Control Plane host (y/n)? [y]: n

[+] Is host (n01) a Worker host (y/n)? [n]: y

[+] Is host (n01) an etcd host (y/n)? [n]: n

[+] Override Hostname of host (n01) [none]: n01

[+] Internal IP of host (n01) [none]: 192.168.2.72

[+] Docker socket path on host (n01) [/var/run/docker.sock]:

[+] Network Plugin Type (flannel, calico, weave, canal, aci) [canal]: calico

[+] Authentication Strategy [x509]:

[+] Authorization Mode (rbac, none) [rbac]:

[+] Kubernetes Docker image [rancher/hyperkube:v1.24.17-rancher1]: v1.23.16-rancher2-3

[+] Cluster domain [cluster.local]:

[+] Service Cluster IP Range [10.43.0.0/16]:

[+] Enable PodSecurityPolicy [n]: n

[+] Cluster Network CIDR [10.42.0.0/16]:

[+] Cluster DNS Service IP [10.43.0.10]:

[+] Add addon manifest URLs or YAML files [no]:

The generated configuration file is shown below; edit it as needed.

[rke@m01 ~]$ cat cluster.yml

# If you intended to deploy Kubernetes in an air-gapped environment,

# please consult the documentation on how to configure custom RKE images.

nodes:
- address: m01
  port: "22"
  internal_address: 192.168.2.71
  role:
  - controlplane
  - etcd
  hostname_override: ""
  user: rke
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []
- address: n01
  port: "22"
  internal_address: 192.168.2.72
  role:
  - worker
  hostname_override: n01
  user: rke
  docker_socket: /var/run/docker.sock
  ssh_key: ""
  ssh_key_path: ~/.ssh/id_rsa
  ssh_cert: ""
  ssh_cert_path: ""
  labels: {}
  taints: []

services:
  etcd:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    external_urls: []
    ca_cert: ""
    cert: ""
    key: ""
    path: ""
    uid: 0
    gid: 0
    snapshot: null
    retention: ""
    creation: ""
    backup_config: null
  kube-api:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    service_cluster_ip_range: 10.43.0.0/16
    service_node_port_range: ""
    pod_security_policy: false
    always_pull_images: false
    secrets_encryption_config: null
    audit_log: null
    admission_configuration: null
    event_rate_limit: null
  kube-controller:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_cidr: 10.42.0.0/16
    service_cluster_ip_range: 10.43.0.0/16
  scheduler:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
  kubelet:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []
    cluster_domain: cluster.local
    infra_container_image: ""
    cluster_dns_server: 10.43.0.10
    fail_swap_on: false
    generate_serving_certificate: false
  kubeproxy:
    image: ""
    extra_args: {}
    extra_args_array: {}
    extra_binds: []
    extra_env: []
    win_extra_args: {}
    win_extra_args_array: {}
    win_extra_binds: []
    win_extra_env: []

network:
  plugin: calico
  options: {}
  mtu: 0
  node_selector: {}
  update_strategy: null
  tolerations: []
authentication:
  strategy: x509
  sans: []
  webhook: null
addons: ""
addons_include: []

system_images:
  etcd: rancher/mirrored-coreos-etcd:v3.5.3
  alpine: rancher/rke-tools:v0.1.88
  nginx_proxy: rancher/rke-tools:v0.1.88
  cert_downloader: rancher/rke-tools:v0.1.88
  kubernetes_services_sidecar: rancher/rke-tools:v0.1.88
  kubedns: rancher/mirrored-k8s-dns-kube-dns:1.21.1
  dnsmasq: rancher/mirrored-k8s-dns-dnsmasq-nanny:1.21.1
  kubedns_sidecar: rancher/mirrored-k8s-dns-sidecar:1.21.1
  kubedns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  coredns: rancher/mirrored-coredns-coredns:1.9.0
  coredns_autoscaler: rancher/mirrored-cluster-proportional-autoscaler:1.8.5
  nodelocal: rancher/mirrored-k8s-dns-node-cache:1.21.1
  kubernetes: rancher/hyperkube:v1.23.16-rancher2
  flannel: rancher/mirrored-coreos-flannel:v0.15.1
  flannel_cni: rancher/flannel-cni:v0.3.0-rancher6
  calico_node: rancher/mirrored-calico-node:v3.22.5
  calico_cni: rancher/calico-cni:v3.22.5-rancher1
  calico_controllers: rancher/mirrored-calico-kube-controllers:v3.22.5
  calico_ctl: rancher/mirrored-calico-ctl:v3.22.5
  calico_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.5
  canal_node: rancher/mirrored-calico-node:v3.22.5
  canal_cni: rancher/calico-cni:v3.22.5-rancher1
  canal_controllers: rancher/mirrored-calico-kube-controllers:v3.22.5
  canal_flannel: rancher/mirrored-flannelcni-flannel:v0.17.0
  canal_flexvol: rancher/mirrored-calico-pod2daemon-flexvol:v3.22.5
  weave_node: weaveworks/weave-kube:2.8.1
  weave_cni: weaveworks/weave-npc:2.8.1
  pod_infra_container: rancher/mirrored-pause:3.6
  ingress: rancher/nginx-ingress-controller:nginx-1.5.1-rancher2
  ingress_backend: rancher/mirrored-nginx-ingress-controller-defaultbackend:1.5-rancher1
  ingress_webhook: rancher/mirrored-ingress-nginx-kube-webhook-certgen:v1.1.1
  metrics_server: rancher/mirrored-metrics-server:v0.6.1
  windows_pod_infra_container: rancher/mirrored-pause:3.6
  aci_cni_deploy_container: noiro/cnideploy:5.2.7.1.81c2369
  aci_host_container: noiro/aci-containers-host:5.2.7.1.81c2369
  aci_opflex_container: noiro/opflex:5.2.7.1.81c2369
  aci_mcast_container: noiro/opflex:5.2.7.1.81c2369
  aci_ovs_container: noiro/openvswitch:5.2.7.1.81c2369
  aci_controller_container: noiro/aci-containers-controller:5.2.7.1.81c2369
  aci_gbp_server_container: noiro/gbp-server:5.2.7.1.81c2369
  aci_opflex_server_container: noiro/opflex-server:5.2.7.1.81c2369

ssh_key_path: ~/.ssh/id_rsa
ssh_cert_path: ""
ssh_agent_auth: false
authorization:
  mode: rbac
  options: {}
ignore_docker_version: null
enable_cri_dockerd: null
kubernetes_version: ""
private_registries: []
ingress:
  provider: ""
  options: {}
  node_selector: {}
  extra_args: {}
  dns_policy: ""
  extra_envs: []
  extra_volumes: []
  extra_volume_mounts: []
  update_strategy: null
  http_port: 0
  https_port: 0
  network_mode: ""
  tolerations: []
  default_backend: null
  default_http_backend_priority_class_name: ""
  nginx_ingress_controller_priority_class_name: ""
  default_ingress_class: null
cluster_name: ""
cloud_provider:
  name: ""
prefix_path: ""
win_prefix_path: ""
addon_job_timeout: 0
bastion_host:
  address: ""
  port: ""
  user: ""
  ssh_key: ""
  ssh_key_path: ""
  ssh_cert: ""
  ssh_cert_path: ""
  ignore_proxy_env_vars: false
monitoring:
  provider: ""
  options: {}
  node_selector: {}
  update_strategy: null
  replicas: null
  tolerations: []
  metrics_server_priority_class_name: ""
restore:
  restore: false
  snapshot_name: ""
rotate_encryption_key: false

dns: null

Create the K8S cluster

# Run rke up to bring up the cluster
[rke@m01 ~]$ rke up --config ./cluster.yml

INFO[0000] Running RKE version: v1.3.24

INFO[0000] Initiating Kubernetes cluster

INFO[0000] [dialer] Setup tunnel for host [m01]

INFO[0000] [dialer] Setup tunnel for host [n01]

INFO[0000] Finding container [cluster-state-deployer] on host [m01], try #1

INFO[0000] Finding container [cluster-state-deployer] on host [n01], try #1

INFO[0000] [certificates] Generating CA kubernetes certificates

INFO[0000] [certificates] Generating Kubernetes API server aggregation layer requestheader client CA certificates

INFO[0000] [certificates] GenerateServingCertificate is disabled, checking if there are unused kubelet certificates

INFO[0000] [certificates] Generating Kubernetes API server certificates

INFO[0000] [certificates] Generating Service account token key

INFO[0000] [certificates] Generating Kube Controller certificates

INFO[0001] [certificates] Generating Kube Scheduler certificates

INFO[0001] [certificates] Generating Kube Proxy certificates

INFO[0001] [certificates] Generating Node certificate

INFO[0001] [certificates] Generating admin certificates and kubeconfig

INFO[0001] [certificates] Generating Kubernetes API server proxy client certificates

INFO[0001] [certificates] Generating kube-etcd-192-168-2-71 certificate and key

INFO[0001] Successfully Deployed state file at [./cluster.rkestate]

INFO[0001] Building Kubernetes cluster

INFO[0001] [dialer] Setup tunnel for host [n01]

INFO[0001] [dialer] Setup tunnel for host [m01]

INFO[0002] [network] Deploying port listener containers

INFO[0002] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0002] Starting container [rke-etcd-port-listener] on host [m01], try #1

INFO[0002] [network] Successfully started [rke-etcd-port-listener] container on host [m01]

INFO[0002] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0003] Starting container [rke-cp-port-listener] on host [m01], try #1

INFO[0003] [network] Successfully started [rke-cp-port-listener] container on host [m01]

INFO[0003] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0003] Starting container [rke-worker-port-listener] on host [n01], try #1

INFO[0003] [network] Successfully started [rke-worker-port-listener] container on host [n01]

INFO[0003] [network] Port listener containers deployed successfully

INFO[0003] [network] Running control plane -> etcd port checks

INFO[0003] [network] Checking if host [m01] can connect to host(s) [192.168.2.71] on port(s) [2379], try #1

INFO[0003] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0004] Starting container [rke-port-checker] on host [m01], try #1

INFO[0004] [network] Successfully started [rke-port-checker] container on host [m01]

INFO[0004] Removing container [rke-port-checker] on host [m01], try #1

INFO[0004] [network] Running control plane -> worker port checks

INFO[0004] [network] Checking if host [m01] can connect to host(s) [192.168.2.72] on port(s) [10250], try #1

INFO[0004] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0004] Starting container [rke-port-checker] on host [m01], try #1

INFO[0004] [network] Successfully started [rke-port-checker] container on host [m01]

INFO[0004] Removing container [rke-port-checker] on host [m01], try #1

INFO[0004] [network] Running workers -> control plane port checks

INFO[0004] [network] Checking if host [n01] can connect to host(s) [192.168.2.71] on port(s) [6443], try #1

INFO[0004] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0005] Starting container [rke-port-checker] on host [n01], try #1

INFO[0005] [network] Successfully started [rke-port-checker] container on host [n01]

INFO[0005] Removing container [rke-port-checker] on host [n01], try #1

INFO[0005] [network] Checking KubeAPI port Control Plane hosts

INFO[0005] [network] Removing port listener containers

INFO[0005] Removing container [rke-etcd-port-listener] on host [m01], try #1

INFO[0005] [remove/rke-etcd-port-listener] Successfully removed container on host [m01]

INFO[0005] Removing container [rke-cp-port-listener] on host [m01], try #1

INFO[0005] [remove/rke-cp-port-listener] Successfully removed container on host [m01]

INFO[0005] Removing container [rke-worker-port-listener] on host [n01], try #1

INFO[0005] [remove/rke-worker-port-listener] Successfully removed container on host [n01]

INFO[0005] [network] Port listener containers removed successfully

INFO[0005] [certificates] Deploying kubernetes certificates to Cluster nodes

INFO[0005] Finding container [cert-deployer] on host [n01], try #1

INFO[0005] Finding container [cert-deployer] on host [m01], try #1

INFO[0005] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0005] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0006] Starting container [cert-deployer] on host [m01], try #1

INFO[0006] Starting container [cert-deployer] on host [n01], try #1

INFO[0006] Finding container [cert-deployer] on host [m01], try #1

INFO[0006] Finding container [cert-deployer] on host [n01], try #1

INFO[0011] Finding container [cert-deployer] on host [m01], try #1

INFO[0011] Removing container [cert-deployer] on host [m01], try #1

INFO[0011] Finding container [cert-deployer] on host [n01], try #1

INFO[0011] Removing container [cert-deployer] on host [n01], try #1

INFO[0011] [reconcile] Rebuilding and updating local kube config

INFO[0011] Successfully Deployed local admin kubeconfig at [./kube_config_cluster.yml]

WARN[0011] [reconcile] host [m01] is a control plane node without reachable Kubernetes API endpoint in the cluster

WARN[0011] [reconcile] no control plane node with reachable Kubernetes API endpoint in the cluster found

INFO[0011] [certificates] Successfully deployed kubernetes certificates to Cluster nodes

INFO[0011] [file-deploy] Deploying file [/etc/kubernetes/audit-policy.yaml] to node [m01]

INFO[0011] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0011] Starting container [file-deployer] on host [m01], try #1

INFO[0012] Successfully started [file-deployer] container on host [m01]

INFO[0012] Waiting for [file-deployer] container to exit on host [m01]

INFO[0012] Waiting for [file-deployer] container to exit on host [m01]

INFO[0012] Container [file-deployer] is still running on host [m01]: stderr: [], stdout: []

INFO[0013] Removing container [file-deployer] on host [m01], try #1

INFO[0013] [remove/file-deployer] Successfully removed container on host [m01]

INFO[0013] [/etc/kubernetes/audit-policy.yaml] Successfully deployed audit policy file to Cluster control nodes

INFO[0013] [reconcile] Reconciling cluster state

INFO[0013] [reconcile] This is newly generated cluster

INFO[0013] Pre-pulling kubernetes images

INFO[0013] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [m01]

INFO[0013] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [n01]

INFO[0013] Kubernetes images pulled successfully

INFO[0013] [etcd] Building up etcd plane..

INFO[0013] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0013] Starting container [etcd-fix-perm] on host [m01], try #1

INFO[0013] Successfully started [etcd-fix-perm] container on host [m01]

INFO[0013] Waiting for [etcd-fix-perm] container to exit on host [m01]

INFO[0013] Waiting for [etcd-fix-perm] container to exit on host [m01]

INFO[0013] Container [etcd-fix-perm] is still running on host [m01]: stderr: [], stdout: []

INFO[0014] Removing container [etcd-fix-perm] on host [m01], try #1

INFO[0014] [remove/etcd-fix-perm] Successfully removed container on host [m01]

INFO[0014] Image [rancher/mirrored-coreos-etcd:v3.5.3] exists on host [m01]

INFO[0014] Starting container [etcd] on host [m01], try #1

INFO[0014] [etcd] Successfully started [etcd] container on host [m01]

INFO[0014] [etcd] Running rolling snapshot container [etcd-snapshot-once] on host [m01]

INFO[0014] Pulling image [rancher/rke-tools:v0.1.90] on host [m01], try #1

INFO[0038] Image [rancher/rke-tools:v0.1.90] exists on host [m01]

INFO[0039] Starting container [etcd-rolling-snapshots] on host [m01], try #1

INFO[0039] [etcd] Successfully started [etcd-rolling-snapshots] container on host [m01]

INFO[0044] Image [rancher/rke-tools:v0.1.90] exists on host [m01]

INFO[0045] Starting container [rke-bundle-cert] on host [m01], try #1

INFO[0045] [certificates] Successfully started [rke-bundle-cert] container on host [m01]

INFO[0045] Waiting for [rke-bundle-cert] container to exit on host [m01]

INFO[0045] Container [rke-bundle-cert] is still running on host [m01]: stderr: [], stdout: []

INFO[0046] [certificates] successfully saved certificate bundle [/opt/rke/etcd-snapshots//pki.bundle.tar.gz] on host [m01]

INFO[0046] Removing container [rke-bundle-cert] on host [m01], try #1

INFO[0046] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0046] Starting container [rke-log-linker] on host [m01], try #1

INFO[0047] [etcd] Successfully started [rke-log-linker] container on host [m01]

INFO[0047] Removing container [rke-log-linker] on host [m01], try #1

INFO[0047] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0047] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0047] Starting container [rke-log-linker] on host [m01], try #1

INFO[0047] [etcd] Successfully started [rke-log-linker] container on host [m01]

INFO[0047] Removing container [rke-log-linker] on host [m01], try #1

INFO[0047] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0047] [etcd] Successfully started etcd plane.. Checking etcd cluster health

INFO[0047] [etcd] etcd host [m01] reported healthy=true

INFO[0047] [controlplane] Building up Controller Plane..

INFO[0047] Finding container [service-sidekick] on host [m01], try #1

INFO[0047] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0048] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [m01]

INFO[0048] Starting container [kube-apiserver] on host [m01], try #1

INFO[0048] [controlplane] Successfully started [kube-apiserver] container on host [m01]

INFO[0048] [healthcheck] Start Healthcheck on service [kube-apiserver] on host [m01]

INFO[0053] [healthcheck] service [kube-apiserver] on host [m01] is healthy

INFO[0053] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0053] Starting container [rke-log-linker] on host [m01], try #1

INFO[0053] [controlplane] Successfully started [rke-log-linker] container on host [m01]

INFO[0053] Removing container [rke-log-linker] on host [m01], try #1

INFO[0054] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0054] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [m01]

INFO[0054] Starting container [kube-controller-manager] on host [m01], try #1

INFO[0054] [controlplane] Successfully started [kube-controller-manager] container on host [m01]

INFO[0054] [healthcheck] Start Healthcheck on service [kube-controller-manager] on host [m01]

INFO[0059] [healthcheck] service [kube-controller-manager] on host [m01] is healthy

INFO[0059] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0059] Starting container [rke-log-linker] on host [m01], try #1

INFO[0059] [controlplane] Successfully started [rke-log-linker] container on host [m01]

INFO[0059] Removing container [rke-log-linker] on host [m01], try #1

INFO[0060] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0060] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [m01]

INFO[0060] Starting container [kube-scheduler] on host [m01], try #1

INFO[0060] [controlplane] Successfully started [kube-scheduler] container on host [m01]

INFO[0060] [healthcheck] Start Healthcheck on service [kube-scheduler] on host [m01]

INFO[0065] [healthcheck] service [kube-scheduler] on host [m01] is healthy

INFO[0065] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0065] Starting container [rke-log-linker] on host [m01], try #1

INFO[0065] [controlplane] Successfully started [rke-log-linker] container on host [m01]

INFO[0065] Removing container [rke-log-linker] on host [m01], try #1

INFO[0065] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0065] [controlplane] Successfully started Controller Plane..

INFO[0065] [authz] Creating rke-job-deployer ServiceAccount

INFO[0065] [authz] rke-job-deployer ServiceAccount created successfully

INFO[0065] [authz] Creating system:node ClusterRoleBinding

INFO[0065] [authz] system:node ClusterRoleBinding created successfully

INFO[0065] [authz] Creating kube-apiserver proxy ClusterRole and ClusterRoleBinding

INFO[0065] [authz] kube-apiserver proxy ClusterRole and ClusterRoleBinding created successfully

INFO[0065] Successfully Deployed state file at [./cluster.rkestate]

INFO[0065] [state] Saving full cluster state to Kubernetes

INFO[0065] [state] Successfully Saved full cluster state to Kubernetes ConfigMap: full-cluster-state

INFO[0065] [worker] Building up Worker Plane..

INFO[0065] Finding container [service-sidekick] on host [m01], try #1

INFO[0065] [sidekick] Sidekick container already created on host [m01]

INFO[0065] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [m01]

INFO[0066] Starting container [kubelet] on host [m01], try #1

INFO[0066] [worker] Successfully started [kubelet] container on host [m01]

INFO[0066] [healthcheck] Start Healthcheck on service [kubelet] on host [m01]

INFO[0066] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0066] Starting container [nginx-proxy] on host [n01], try #1

INFO[0066] [worker] Successfully started [nginx-proxy] container on host [n01]

INFO[0066] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0067] Starting container [rke-log-linker] on host [n01], try #1

INFO[0067] [worker] Successfully started [rke-log-linker] container on host [n01]

INFO[0067] Removing container [rke-log-linker] on host [n01], try #1

INFO[0067] [remove/rke-log-linker] Successfully removed container on host [n01]

INFO[0067] Finding container [service-sidekick] on host [n01], try #1

INFO[0067] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0068] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [n01]

INFO[0068] Starting container [kubelet] on host [n01], try #1

INFO[0068] [worker] Successfully started [kubelet] container on host [n01]

INFO[0068] [healthcheck] Start Healthcheck on service [kubelet] on host [n01]

INFO[0081] [healthcheck] service [kubelet] on host [m01] is healthy

INFO[0081] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0081] Starting container [rke-log-linker] on host [m01], try #1

INFO[0082] [worker] Successfully started [rke-log-linker] container on host [m01]

INFO[0082] Removing container [rke-log-linker] on host [m01], try #1

INFO[0082] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0082] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [m01]

INFO[0082] Starting container [kube-proxy] on host [m01], try #1

INFO[0082] [worker] Successfully started [kube-proxy] container on host [m01]

INFO[0082] [healthcheck] Start Healthcheck on service [kube-proxy] on host [m01]

INFO[0083] [healthcheck] service [kubelet] on host [n01] is healthy

INFO[0083] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0084] Starting container [rke-log-linker] on host [n01], try #1

INFO[0084] [worker] Successfully started [rke-log-linker] container on host [n01]

INFO[0084] Removing container [rke-log-linker] on host [n01], try #1

INFO[0084] [remove/rke-log-linker] Successfully removed container on host [n01]

INFO[0084] Image [rancher/hyperkube:v1.23.16-rancher2] exists on host [n01]

INFO[0084] Starting container [kube-proxy] on host [n01], try #1

INFO[0084] [worker] Successfully started [kube-proxy] container on host [n01]

INFO[0084] [healthcheck] Start Healthcheck on service [kube-proxy] on host [n01]

INFO[0087] [healthcheck] service [kube-proxy] on host [m01] is healthy

INFO[0087] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0087] Starting container [rke-log-linker] on host [m01], try #1

INFO[0088] [worker] Successfully started [rke-log-linker] container on host [m01]

INFO[0088] Removing container [rke-log-linker] on host [m01], try #1

INFO[0088] [remove/rke-log-linker] Successfully removed container on host [m01]

INFO[0090] [healthcheck] service [kube-proxy] on host [n01] is healthy

INFO[0090] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0090] Starting container [rke-log-linker] on host [n01], try #1

INFO[0090] [worker] Successfully started [rke-log-linker] container on host [n01]

INFO[0091] Removing container [rke-log-linker] on host [n01], try #1

INFO[0091] [remove/rke-log-linker] Successfully removed container on host [n01]

INFO[0091] [worker] Successfully started Worker Plane..

INFO[0091] Image [rancher/rke-tools:v0.1.88] exists on host [n01]

INFO[0091] Image [rancher/rke-tools:v0.1.88] exists on host [m01]

INFO[0091] Starting container [rke-log-cleaner] on host [n01], try #1

INFO[0091] Starting container [rke-log-cleaner] on host [m01], try #1

INFO[0091] [cleanup] Successfully started [rke-log-cleaner] container on host [m01]

INFO[0091] Removing container [rke-log-cleaner] on host [m01], try #1

INFO[0091] [cleanup] Successfully started [rke-log-cleaner] container on host [n01]

INFO[0091] Removing container [rke-log-cleaner] on host [n01], try #1

INFO[0091] [remove/rke-log-cleaner] Successfully removed container on host [m01]

INFO[0091] [remove/rke-log-cleaner] Successfully removed container on host [n01]

INFO[0091] [sync] Syncing nodes Labels and Taints

INFO[0091] [sync] Successfully synced nodes Labels and Taints

INFO[0091] [network] Setting up network plugin: calico

INFO[0091] [addons] Saving ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0091] [addons] Successfully saved ConfigMap for addon rke-network-plugin to Kubernetes

INFO[0091] [addons] Executing deploy job rke-network-plugin

INFO[0097] [addons] Setting up coredns

INFO[0097] [addons] Saving ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0097] [addons] Successfully saved ConfigMap for addon rke-coredns-addon to Kubernetes

INFO[0097] [addons] Executing deploy job rke-coredns-addon

INFO[0102] [addons] CoreDNS deployed successfully

INFO[0102] [dns] DNS provider coredns deployed successfully

INFO[0102] [addons] Setting up Metrics Server

INFO[0102] [addons] Saving ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0102] [addons] Successfully saved ConfigMap for addon rke-metrics-addon to Kubernetes

INFO[0102] [addons] Executing deploy job rke-metrics-addon

INFO[0107] [addons] Metrics Server deployed successfully

INFO[0107] [ingress] Setting up nginx ingress controller

INFO[0107] [ingress] removing admission batch jobs if they exist

INFO[0107] [addons] Saving ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0107] [addons] Successfully saved ConfigMap for addon rke-ingress-controller to Kubernetes

INFO[0107] [addons] Executing deploy job rke-ingress-controller

INFO[0112] [ingress] removing default backend service and deployment if they exist

INFO[0112] [ingress] ingress controller nginx deployed successfully

INFO[0112] [addons] Setting up user addons

INFO[0112] [addons] no user addons defined

INFO[0112] Finished building Kubernetes cluster successfully # Once this message appears, the cluster has been deployed and started

Install kubectl

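# 1. Download the kubectl binary (run as root or via sudo, since /usr/local/bin is normally writable only by root)

wget https://cdn.dl.k8s.io/release/v1.23.16/bin/linux/amd64/kubectl -O /usr/local/bin/kubectl

# 2. Make it executable

chmod +x /usr/local/bin/kubectl

# 3. Verify the kubectl version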

[rke@m01 ~]$ kubectl version

Client Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.16", GitCommit:"60e5135f758b6e43d0523b3277e8d34b4ab3801f", GitTreeState:"clean", BuildDate:"2023-01-18T16:01:10Z", GoVersion:"go1.19.5", Compiler:"gc", Platform:"linux/amd64"}

Server Version: version.Info{Major:"1", Minor:"23", GitVersion:"v1.23.16", GitCommit:"60e5135f758b6e43d0523b3277e8d34b4ab3801f", GitTreeState:"clean", BuildDate:"2023-01-18T15:54:23Z", GoVersion:"go1.19.5", Compiler:"gc", Platform:"linux/amd64"}
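Before relying on the downloaded binary, it can be worth checking its integrity. The sketch below assumes that a checksum file is published next to the binary under the same path with a `.sha256` suffix (as on the upstream dl.k8s.io release host); the helper name `verify_sha256` is hypothetical and not part of kubectl or RKE.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare a file's SHA-256 digest with an expected hex string.
# Returns 0 when they match, non-zero otherwise (including when the file is unreadable).
verify_sha256() {
  local file=$1 expected=$2 actual
  actual=$(sha256sum "$file" 2>/dev/null | awk '{ print $1 }')
  [ -n "$actual" ] && [ "$actual" = "$expected" ]
}

# Usage (assumes the .sha256 file exists at this URL):
#   expected=$(curl -sL https://dl.k8s.io/release/v1.23.16/bin/linux/amd64/kubectl.sha256)
#   verify_sha256 /usr/local/bin/kubectl "$expected" && echo "kubectl checksum OK"
```

Taking the expected digest as an argument keeps the helper independent of where the checksum comes from.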

# 4. Configure kubectl for the rke user; from now on the cluster is managed as the rke user

[rke@m01 ~]$ ls

cluster.rkestate cluster.yml kube_config_cluster.yml

[rke@m01 ~]$ mkdir ~/.kube

[rke@m01 ~]$ cp kube_config_cluster.yml ~/.kube

[rke@m01 ~]$ cp ~/.kube/kube_config_cluster.yml ~/.kube/config

[rke@m01 ~]$ echo "source <(kubectl completion bash)" >> ~/.bashrc

[rke@m01 ~]$ source ~/.bashrc

# 5. Back up the cluster deployment files: copy cluster.yml and cluster.rkestate as well, so they are not lost

[rke@m01 ~]$ cp cluster.yml ~/.kube/

[rke@m01 ~]$ cp cluster.rkestate ~/.kube/
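Copying the files into `~/.kube` keeps them on the same host and overwrites the previous copy each time. As a rough sketch of an alternative, the function below writes a timestamped copy of the three files into a backup directory and restricts permissions, since `cluster.rkestate` and `kube_config_cluster.yml` hold cluster credentials. The function name `backup_rke_files` and the backup location are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the RKE files from SRC_DIR into a new timestamped
# directory under DEST_ROOT and print the directory that was created.
backup_rke_files() {
  local src=$1 dest_root=$2 dest f
  dest="$dest_root/rke-backup-$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$dest" || return 1
  chmod 700 "$dest"
  for f in cluster.yml cluster.rkestate kube_config_cluster.yml; do
    cp -p "$src/$f" "$dest/" || return 1
    chmod 600 "$dest/$f"   # these files contain credentials
  done
  echo "$dest"
}

# Usage (the target directory is an example):
#   backup_rke_files "$HOME" "$HOME/backups"
```

For real protection the resulting directory would still need to be copied off the node.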

# 6. Check the cluster status

[rke@m01 ~]$ kubectl get nodes -owide

NAME   STATUS   ROLES               AGE   VERSION    INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                            KERNEL-VERSION                CONTAINER-RUNTIME
m01    Ready    controlplane,etcd   24h   v1.23.16   192.168.2.71   <none>        Rocky Linux 8.10 (Green Obsidian)   5.4.289-1.el8.elrepo.x86_64   docker://20.10.24
n01    Ready    worker              24h   v1.23.16   192.168.2.72   <none>        Rocky Linux 8.10 (Green Obsidian)   5.4.289-1.el8.elrepo.x86_64   docker://20.10.24
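Reading the STATUS column by eye works for two nodes; for scripted checks, a small filter can list any node that is not Ready. This is a minimal sketch that assumes the default column order of `kubectl get nodes --no-headers` (NAME first, STATUS second); the function name `not_ready_nodes` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin and
# print the names of nodes whose STATUS does not start with "Ready".
# "Ready,SchedulingDisabled" is treated as ready; "NotReady" is not.
not_ready_nodes() {
  awk '$2 !~ /^Ready/ { print $1 }'
}

# Usage (needs a working kubeconfig):
#   kubectl get nodes --no-headers | not_ready_nodes
```

An empty result means every node reported Ready.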

[rke@m01 ~]$ kubectl get pods -A -owide

NAMESPACE       NAME                                      READY   STATUS      RESTARTS   AGE   IP             NODE   NOMINATED NODE   READINESS GATES
ingress-nginx   ingress-nginx-admission-create-c4lcm      0/1     Completed   0          24h   10.42.77.2     n01    <none>           <none>
ingress-nginx   ingress-nginx-admission-patch-zpw72       0/1     Completed   0          24h   10.42.77.3     n01    <none>           <none>
ingress-nginx   nginx-ingress-controller-tv9sq            1/1     Running     0          24h   10.42.77.7     n01    <none>           <none>
kube-system     calico-kube-controllers-56b7c9f8d-chftd   1/1     Running     0          24h   10.42.77.5     n01    <none>           <none>
kube-system     calico-node-57chf                         1/1     Running     0          24h   192.168.2.71   m01    <none>           <none>
kube-system     calico-node-cx2ld                         1/1     Running     0          24h   192.168.2.72   n01    <none>           <none>
kube-system     coredns-548ff45b67-gvbdb                  1/1     Running     0          24h   10.42.77.1     n01    <none>           <none>
kube-system     coredns-autoscaler-d5944f655-f4ljx        1/1     Running     0          24h   10.42.77.4     n01    <none>           <none>
kube-system     metrics-server-5c4895ffbd-hh9mv           1/1     Running     0          24h   10.42.77.6     n01    <none>           <none>
kube-system     rke-coredns-addon-deploy-job-6svz4        0/1     Completed   0          24h   192.168.2.71   m01    <none>           <none>
kube-system     rke-ingress-controller-deploy-job-9sxsw   0/1     Completed   0          24h   192.168.2.71   m01    <none>           <none>
kube-system     rke-metrics-addon-deploy-job-ccs85        0/1     Completed   0          24h   192.168.2.71   m01    <none>           <none>
kube-system     rke-network-plugin-deploy-job-nsjb6       0/1     Completed   0          24h   192.168.2.71   m01    <none>           <none>

Deploy the Rancher dashboard

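We use Rancher to manage the K8S cluster; its UI is well suited to developers. There are two ways to deploy Rancher:

- Deploy it inside the K8S cluster and manage that cluster directly

- Deploy Rancher as a standalone application and import the cluster (works regardless of how the K8S cluster was deployed)

To keep the deployment simple and quick, we start a Rancher application with Docker directly on the server 192.168.2.77, as follows:

# 1. Start the Rancher service; check the compatibility between the Rancher version and the K8S version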

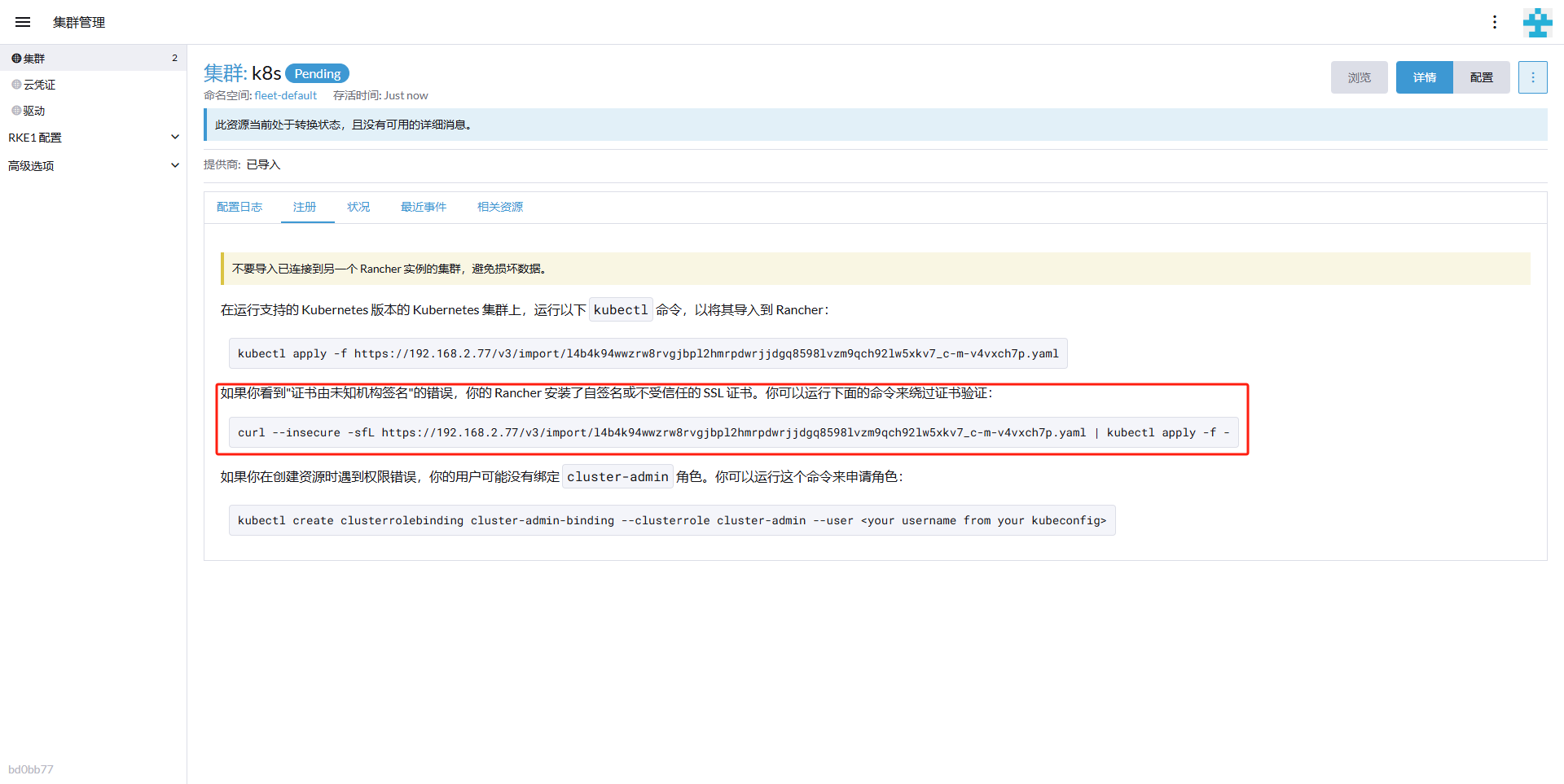

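docker run -d --restart=unless-stopped -p 80:80 -p 443:443 --privileged rancher/rancher:v2.7-head

Once it has started, open https://192.168.2.77/ and follow the on-screen prompts to log in to the Rancher management UI. In the cluster management section, follow the prompts to fill in the required information, then copy the agent deployment command and run it on node m01.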
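The first login usually asks for a bootstrap password. Assuming this Rancher image generates one at first start and prints it to the container log on a line containing `Bootstrap Password:` (the approach described in the Rancher Docker install documentation for recent versions), the sketch below extracts just the value. The function name `extract_bootstrap_password` and the exact log line layout are assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read container logs on stdin and print the text that
# follows the first "Bootstrap Password:" marker.
extract_bootstrap_password() {
  sed -n 's/.*Bootstrap Password: *//p' | head -n 1
}

# Usage (replace <container-id> with the ID printed by `docker ps`):
#   docker logs <container-id> 2>&1 | extract_bootstrap_password
```

If nothing is printed, the marker was not found in the log, for example because a password was set explicitly when the container was started.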

# After clicking Create in the UI, we get a deployment command, which we run on node m01

[rke@m01 ~]$ curl --insecure -sfL https://192.168.2.77/v3/import/l4b4k94wwzrw8rvgjbpl2hmrpdwrjjdgq8598lvzm9qch92lw5xkv7_c-m-v4vxch7p.yaml | kubectl apply -f -

# Once it is up, a new namespace (cattle-system) appears, and the newly joined cluster shows up in the dashboard

[rke@m01 ~]$ kubectl get pods -n cattle-system

NAME                                   READY   STATUS    RESTARTS   AGE
cattle-cluster-agent-dc6f5d649-9jgwh   1/1     Running   0          10h
cattle-cluster-agent-dc6f5d649-bp7rx   1/1     Running   0          10h
rancher-webhook-f874c7d7-j8cc4         1/1     Running   0          10h

Rancher Agents

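Rancher communicates with the cluster through cluster-agent (cattle-cluster-agent calls the Kubernetes API to talk to the cluster) and with individual nodes through cattle-node-agent.

If cattle-cluster-agent cannot reach the existing Rancher Server, that is, the server-url, the cluster stays in the Pending state and the error shown is Waiting for full cluster configuration.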
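When a cluster stays Pending, a quick first check is whether the server-url is reachable from a cluster node at all. The sketch below is a generic retry loop plus one possible probe; it assumes the Rancher server answers `pong` on the `/ping` path, and both function names (`retry`, `rancher_ping`) are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to ATTEMPTS times, sleeping DELAY seconds
# between failures. Returns 0 on the first success, 1 if every attempt fails.
retry() {
  local attempts=$1 delay=$2 i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Probe (assumption: Rancher replies "pong" on /ping). --insecure mirrors the
# import command used earlier, where the server has a self-signed certificate.
rancher_ping() {
  [ "$(curl --insecure -s --max-time 5 "$1/ping")" = "pong" ]
}

# Usage, run on a cluster node such as m01:
#   retry 10 5 rancher_ping https://192.168.2.77 && echo "server-url reachable"
```

If the probe never succeeds from the node, the problem is more likely network or DNS related than anything inside the agent itself.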

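Summary

- We deployed a K8S cluster with RKE in standalone mode and set up Rancher to manage it through a web UI. This article should give you a basic picture of the cluster deployment process

- In follow-up posts I will build on this article and extend both the deployment approach and its scope, for example RKE cluster mode, K8S high availability, and deploying Rancher with Helm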